Good artificial intelligence (AI) governance doesn’t require a large technical team. It requires clear policies, practical processes, and an understanding of how AI fits into your business operations. Many organizations already use AI tools in some capacity, but few have systems in place to ensure those tools are used safely, responsibly, and in a way that supports long‑term growth.

This final part of the series outlines a practical, realistic approach to building AI governance that your teams will actually follow.

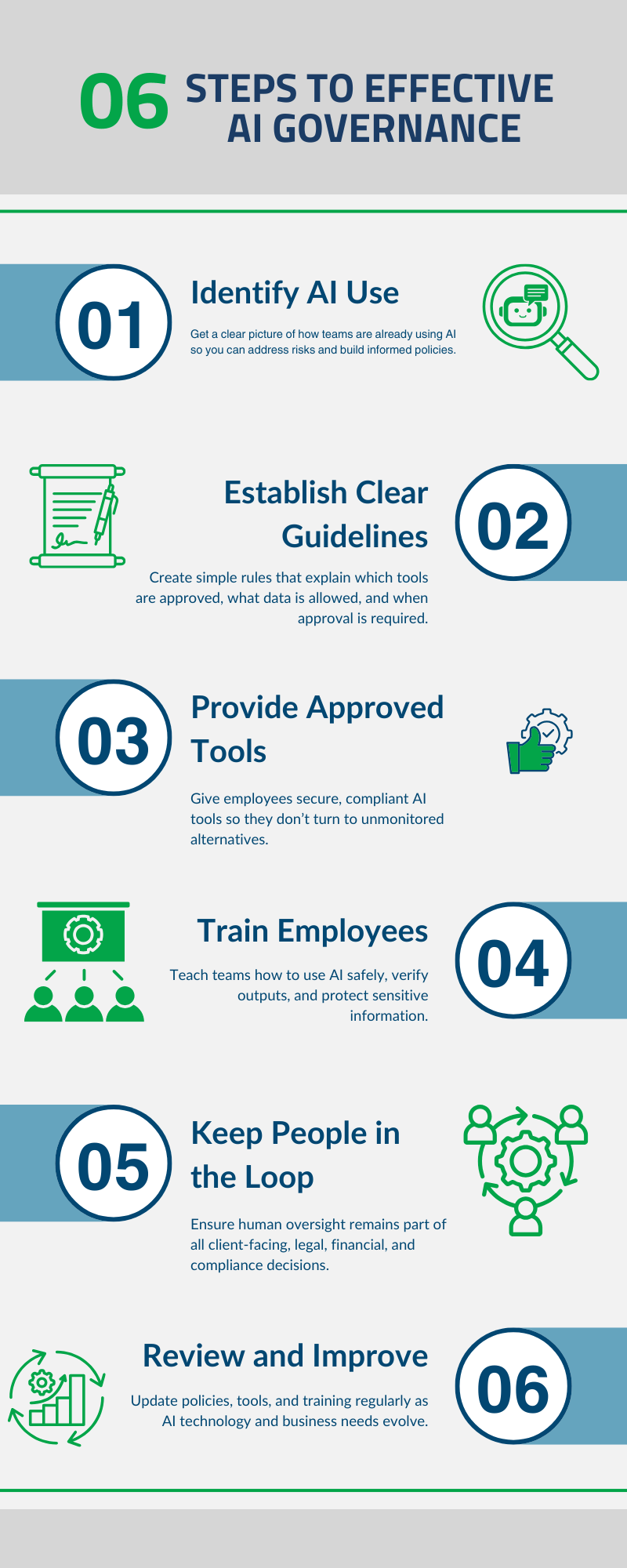

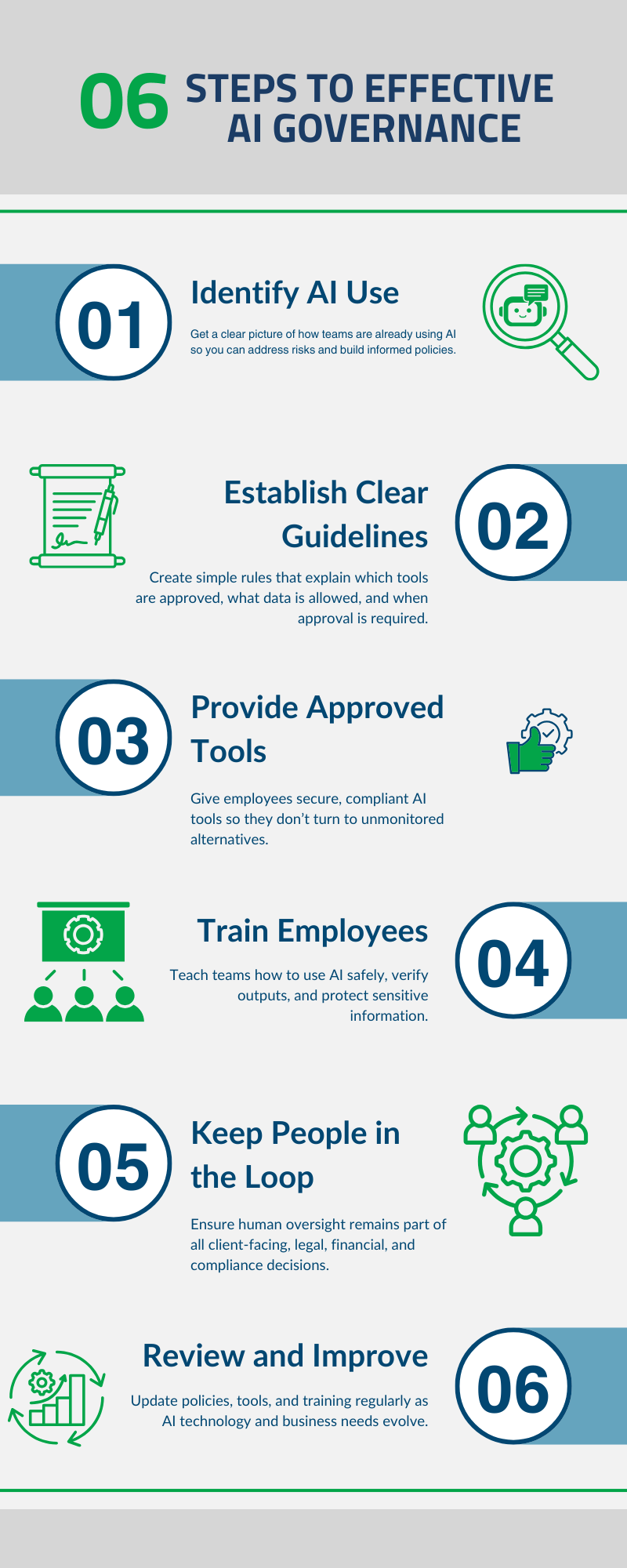

Step 1: Identify Current AI Use

Before creating policies or choosing tools, start by understanding how AI is already being used. This helps uncover risks, reduce guesswork, and build governance based on real behavior rather than assumptions.

Common ways to gather this information include:

- Anonymous employee surveys about AI usage

- Short conversations with department leaders

- Reviewing system or network logs for AI tool access

This gives you a clear picture of where AI is used, how often, and the types of tasks it supports.
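For teams that want to try the log-review option, here is a minimal Python sketch. It assumes a hypothetical CSV export from a web proxy or firewall with `timestamp`, `user`, `department`, and `host` columns. Real log formats vary by vendor, and the domain list is an illustrative starting point, not a complete inventory of AI tools.

```python
"""Sketch: count requests to known AI tool domains in a proxy log export.

Assumes a hypothetical CSV export with columns: timestamp, user, department, host.
The domain list is illustrative only; maintain your own.
"""
import csv
import io
from collections import Counter

# Illustrative, non-exhaustive list of AI tool hostnames (an assumption).
AI_DOMAINS = {"chat.openai.com", "chatgpt.com", "claude.ai", "gemini.google.com"}


def is_ai_host(host: str) -> bool:
    """True if host is a listed AI domain or a subdomain of one."""
    host = host.strip().lower()
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def summarize_ai_usage(log_file) -> Counter:
    """Count AI-tool requests per (department, host) from a CSV file object."""
    counts = Counter()
    for row in csv.DictReader(log_file):
        if is_ai_host(row["host"]):
            counts[(row["department"], row["host"].strip().lower())] += 1
    return counts


if __name__ == "__main__":
    # Small in-memory sample standing in for a real log export.
    sample = io.StringIO(
        "timestamp,user,department,host\n"
        "2024-05-01T09:00,alice,Marketing,chatgpt.com\n"
        "2024-05-01T09:05,bob,Finance,claude.ai\n"
        "2024-05-01T09:07,alice,Marketing,chatgpt.com\n"
        "2024-05-01T09:10,carol,Finance,example.com\n"
    )
    for (dept, host), n in summarize_ai_usage(sample).most_common():
        print(f"{dept:<10} {host:<20} {n}")
```

Grouping by department rather than by individual keeps the focus on where AI is used, which fits the goal of understanding real behavior rather than policing people.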

Step 2: Establish Clear AI Usage Guidelines

Your first AI policy should be simple. The easier it is to understand, the more likely teams are to follow it. A clear, one‑page policy can help set expectations without overwhelming employees.

Your guidelines should define:

- Which AI tools are approved

- What types of information should never be entered into any AI tool

- When employees must get approval before using AI

- Who they should ask when they have questions

Short, practical policies help reduce risk and build consistent habits across teams.
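Some organizations find it helpful to keep the policy’s core rules in a structured form that intake forms or internal tools can check against. The Python sketch below shows one way to do that; every tool name, data category, and contact address in it is a hypothetical placeholder, not a recommendation.

```python
"""Sketch: a one-page AI usage policy expressed as data, plus a simple check.

All tool names, data categories, and the contact address are hypothetical
placeholders; replace them with your organization's actual policy.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class AIPolicy:
    approved_tools: frozenset[str]          # which AI tools are approved
    prohibited_data: frozenset[str]         # never enter into any AI tool
    approval_required_uses: frozenset[str]  # need sign-off before using AI
    contact: str                            # who to ask with questions


POLICY = AIPolicy(
    approved_tools=frozenset({"company-assistant", "approved-writing-tool"}),
    prohibited_data=frozenset({"client_pii", "financial_records", "credentials"}),
    approval_required_uses=frozenset({"client_deliverable", "legal_review"}),
    contact="ai-governance@example.com",
)


def check_request(policy: AIPolicy, tool: str, data: set[str], use: str) -> str:
    """Return a 'blocked', 'needs approval', or 'allowed' decision."""
    if tool not in policy.approved_tools:
        return f"blocked: '{tool}' is not approved; ask {policy.contact}"
    banned = data & policy.prohibited_data
    if banned:
        return f"blocked: prohibited data {sorted(banned)}"
    if use in policy.approval_required_uses:
        return f"needs approval: '{use}' requires sign-off from {policy.contact}"
    return "allowed"


if __name__ == "__main__":
    print(check_request(POLICY, "company-assistant", {"public_info"}, "drafting"))
    print(check_request(POLICY, "company-assistant", {"client_pii"}, "drafting"))
    print(check_request(POLICY, "random-chatbot", set(), "drafting"))
```

The check follows the same order a person would: approved tool first, then prohibited data, then whether sign-off is needed, with the contact named whenever the answer isn’t a simple yes.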

Step 3: Offer Approved AI Tools

Shadow AI (the use of AI tools the organization hasn’t approved or can’t monitor) often happens because employees don’t have access to the right tools. When safe, approved tools are available, teams don’t need to rely on unmonitored alternatives.

Provide AI tools that include:

- Strong privacy protections

- Clear data‑handling policies

- Compliance features relevant to your industry

When employees have the right tools, unapproved tools tend to fall out of use.

Step 4: Train Employees on Responsible AI Use

Even the best tools and policies won’t work without proper training. Employees need to know how to use AI effectively and safely.

Training should cover:

- How to write clear prompts

- How to verify AI‑generated content for accuracy

- How to anonymize or sanitize data before using AI tools

- When to escalate questions or concerns

Training builds confidence, reduces errors, and supports a culture of responsible AI use.
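To illustrate the sanitization point, here is a minimal Python sketch that masks email addresses, card-like numbers, and US-style phone numbers before text is shared with an AI tool. The patterns are simplified assumptions. Pattern matching will miss names, addresses, and many other identifiers, so it supports training and judgment rather than replacing them.

```python
"""Sketch: redact common identifiers from text before sharing it with an AI tool.

Regex-based redaction is a rough first pass, not a guarantee: it misses names,
addresses, and anything that doesn't match these simple, illustrative patterns.
"""
import re

# Applied in order: emails first, then card-like digit runs, then phone numbers.
PATTERNS = [
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
    # US-style numbers such as (555) 123-4567 or 555.123.4567.
    ("PHONE", re.compile(r"\(?\b\d{3}\)?[ .-]?\d{3}[ .-]\d{4}\b")),
]


def redact(text: str) -> str:
    """Replace each matched identifier with a [LABEL] placeholder."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact Jane at jane.doe@example.com or (555) 123-4567."))
    print(redact("Card 4111 1111 1111 1111 on file"))
```

In the first example the name "Jane" passes through untouched, which is exactly the kind of gap employees should be trained to catch themselves.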

Step 5: Keep People in the Loop

AI should assist decision-making, not replace it. Human oversight must remain part of any process involving:

- Client communications

- Financial or legal materials

- Compliance‑related content

- Automated outputs that affect customers

This helps ensure accuracy, protects your organization, and maintains accountability.
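One way to make human oversight enforceable rather than optional is a release gate: AI-generated drafts in sensitive categories can’t go out until a named person signs off. The Python sketch below uses hypothetical category labels based on the list above; in practice, a check like this would live inside whatever CMS, CRM, or ticketing tool you already use.

```python
"""Sketch: hold AI-generated drafts for human sign-off in sensitive categories.

Category labels and the reviewer field are hypothetical illustrations.
"""
from dataclasses import dataclass
from typing import Optional

# Categories from the list above that always need a human reviewer.
REVIEW_REQUIRED = {
    "client_communication",
    "financial_legal",
    "compliance",
    "customer_facing_automation",
}


@dataclass
class Draft:
    text: str
    category: str
    approved_by: Optional[str] = None  # name of the human who signed off


def can_release(draft: Draft) -> bool:
    """Sensitive drafts need a named approver; other drafts may be released."""
    if draft.category in REVIEW_REQUIRED:
        return bool(draft.approved_by)
    return True


def approve(draft: Draft, reviewer: str) -> Draft:
    """Record who approved the draft, keeping accountability traceable."""
    draft.approved_by = reviewer
    return draft


if __name__ == "__main__":
    email = Draft("Dear client, ...", "client_communication")
    print(can_release(email))  # False
    approve(email, "j.smith")
    print(can_release(email))  # True
```

Recording the approver’s name, rather than a simple yes/no flag, supports the accountability goal: anyone reviewing a sent message later can see who checked it.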

Step 6: Review and Improve Over Time

AI governance isn’t a one‑time project. It should evolve as technology advances and your business needs change.

A simple review process could include:

- Quarterly check‑ins with department leaders

- Monitoring usage patterns in approved AI tools

- Updating policies as new risks or tools emerge

Regular reviews help ensure AI governance remains relevant, effective, and aligned with business goals.

Ready to Build a Governance Framework That Supports Safe, Effective AI Use?

WSI helps organizations understand their current AI landscape, reduce risk, and build governance systems that integrate seamlessly into daily operations. A Quick‑Start Guide and AI Risk Assessment will be available soon.